2020

Glass, G.V, Mathis, W.J., & Berliner, D.C. (Sept. 29, 2020). NEPC Review: Student Assessment During COVID-19. Boulder, CO: National Education Policy Center.

Gene V Glass

National Education Policy Center

Student Assessment during COVID-19
Laura Jimenez
Center for American Progress
September 10, 2020

SUMMARY

School closings and the ever-increasing number of deaths provide the backdrop for a proposal by the Center for American Progress (CAP) to deny waivers of the federally mandated administration of standardized tests in Spring 2021. Further, the federal government proposes to add to those assessments in ways that CAP argues would make the test results more useful. In its recent report, CAP sides with the Department of Education's policy of denying such requests for waivers, and it calls for additional assessments that "capture multiple aspects of student well-being, including social-emotional needs, engagement, and conditions for learning" as well as supplementary gathering of student information. The report contends this will assure greater equity in the time of the pandemic, supposedly through the addition of the new measures to annual assessments. Although there have been attempts in the past at multi-variable, test-based accountability schemes, the report endorses this less-than-successful approach, citing studies that do not address the complexity of the undertaking or the effects of its implementation. Considering the massive disruption now occurring in schools and the limited utility of standardized tests even in ordinary times, state agencies and local districts are too hard-pressed by fiscal and time demands and the ramping up of health costs to consider even more costly programs of dubious value. For these reasons, the CAP proposal is ill-timed, unrealistic, and inappropriate for dealing with the exigencies arising from the pandemic.

I. Introduction

With the inexorable ticking of the pandemic death count, historic political unrest in the nation, floods and fires of biblical proportions, and racial unrest, the citizenry searches for respite and a return to better times.
It is not hyperbole to say that the nation's democratic institutions are in a state of crisis. The electorate divides, and traditional political stances harden. When the nation's public schools closed early in the Spring of 2020 due to the coronavirus pandemic, education was inescapably drawn into the debate. Public education, the historic reflector as well as the shaper of democracy, saw one wing claiming the nation's educational ills could be healed by continuing to employ high stakes testing and punitive accountability schemes. On the other end of the spectrum, progressives asked schools to embrace practices that support heightened learning and address socio-emotional needs.

Into this debate, the Center for American Progress (CAP) released its report, Student Assessment during COVID-19. (Note 1) Directed by a Board of liberal politicians - Tom Daschle, Stacey Abrams, John Podesta, and others - the Center surprisingly finds itself closely allied with the opinion of the conservative Betsy DeVos-led Department of Education. She and the Department ordered states to administer standardized achievement tests in spring 2021 to all public school children, despite the dangers of COVID-19 and the uneven attendance (and enrollment) of many of these students. On September 3, 2020, DeVos sent a letter to the Council of Chief State School Officers informing them that no testing waivers would be granted. (Note 2)

The annual every-student testing program has become a common feature of the public education landscape since the passage of NCLB and its successor ESSA. Billions of dollars from the coffers of state agencies and local school districts are spent administering tests required by the federal government if they are to maintain eligibility for federal support. But the costs of mandated state assessments are not solely those levied by the education assessment industry.
Annual assessments cost teachers and students weeks focused on "teaching to the tests" and then administering them. State assessments for accountability have the power to turn the activities of the classroom away from a curriculum valued by educators and toward the content of the commercial paper-and-pencil tests.

II. Findings and Conclusions of the CAP Report

The tenor of the report reviewed here proves to be quite different from what one might assume from its statement supporting testing in 2021. After contrasting the circumstances of spring 2020, when waivers from annual assessment were granted, with the likely circumstances under which the public schools will operate in 2021, the report goes on to state:

"States have sufficient time to plan how to administer not just the state academic assessment next year [2021], but also to establish protocols through which schools can gather additionally critical information about students. A wider spectrum of data can better guide principals, teachers, and families in fulfilling students' needs this school year, which continued disruptions will almost certainly exacerbate until students can return to the classroom" (p. 1).

It takes an act of blind optimism to expect that schools will be able to pull off such state assessment in 2021, plus have the time and resources to collect other critical information. It is safe to say that neither CAP, the USDOE, nor any of the 50 states knows how many schools will be operating in one form or another at the end of the year. However, the assessment that CAP calls for has little in common with the multiple-choice achievement tests that typify state mandated testing in the post-NCLB era:

"Assessing refers to the process of collecting data about students in all forms, including academic and nonacademic information .... the author is referring to the entire process of administering assessments and collecting data about student performance through an array of methodologies [including qualitative methods]" (p. 2).

At this point in the report, it becomes obvious that the thing it calls "assessment" differs markedly in purposes and form from the contemporary practice of state mandated testing. To the burden of delivering instruction in new and unusual ways due to the pandemic, CAP wishes to add the burden of redesigning state assessment systems to include nonacademic data and a new "array of methodologies." The theme of expanding assessment to include new methods and purposes permeates the report - e.g., evaluate impacts of the pandemic and alternative methods of delivering instruction, "assess social-emotional needs, student engagement and attendance, and family engagement." The report addresses less the question of whether 2021 assessments would be worthwhile than it advocates a thorough redesign of education assessment and its purposes. Schools and districts caught in this unanswered dilemma will find little to illuminate their decisions here.

III. The Report's Rationale for its Findings and Conclusions

The report's rationale is vague and reads: "Parents, educators, administrators, and policymakers need more information about how students are doing and being served, not less." The purpose is to "better understand and address . . . the gaps that have been made worse by the coronavirus pandemic" (p. 2). Notably absent is any rationale for either the practicality or the purpose of this expanded assessment. Also absent is any reference to the inequitable and unequal resourcing of our urban schools. Further ignored is any evidence that assessment, in and of itself, improves educational outcomes.
The report is remarkably free of specifics on what new data will be collected and used, offering only statements like "[b]efore students can learn, their well-being, engagement, and conditions for learning must be addressed, and in order to do so, schools must collect these data to inform how they should respond to the challenges raised by the COVID-19 pandemic" (p. 6). The reader is left to speculate on whether the document is designed to improve instruction and education or to serve some other purpose such as perpetuating the test-based accountability systems despite their meager record of success.

IV. Use of the Literature

There exists in the scholarly literature a plethora of research on the disruption to teaching and learning occasioned by outside mandated testing (Note 3), the redirection of the curriculum caused by mandated assessment (Note 4), the negative effect of such assessment on teachers' instructional approaches (Note 5), as well as the irrelevance of such activities to the practice of classroom teachers (Note 6). Scholars have raised strong objections to the mandated assessments connected to federal accountability programs for the purposes of diagnosing the needs of individual students or the efficacy of individual teachers (Note 7). The CAP report addresses none of this scholarship in shaping its recommendations.

On one point, scholars will agree wholeheartedly with a CAP recommendation: "test results should not be used to formally rate teachers or schools" (p. 6). Test-based teacher evaluation - often through Value Added Measurement - is an abysmal failure (Note 8). If state assessment data cannot be used to "formally rate" teachers and schools, they cannot be used to analyze the multiple influences on pretest-posttest score gains.
If they are so flawed, then they can hardly be expected to clarify questions about how the pandemic affected test scores, what modes of coping with the pandemic were more or less effective, and similar challenges of causal analysis that CAP puts forward.

V. Usefulness of the Study for Policy Making

In final form, the general recommendation offered by CAP is as follows:

"Administering the annual state academic assessments in their current form is likely not practical in the circumstances of next school year. That is why, starting now, states must work with their test vendors and technical advisory committees to identify what is feasible regarding the statewide annual assessment" (p. 5).

CAP notes six critical questions:

The report does not take account of the long development and validation timelines required by the test industry. No attention is given to the increase in home schooling and various choice mechanisms that would invalidate any data used for long-term baselines or measures of progress.

VI. Validity of the Findings

The CAP report is of little relevance in the current debate regarding continuation of federally mandated assessments in 2021. However, when read as a critique of the current form of education assessment, there is value in its position. The report clearly sees current methods of assessment falling short of having utility for teachers and administrators. Test data, uninformed by the circumstances under which they have been collected, are of little value. If assessment is to contribute to the development of informed policy, test results must be contextualized by the addition of a great deal of data on students' schools, homes, and communities. The assessments imagined by the report do, in fact, "... need to capture multiple aspects of student well-being, including social-emotional needs, engagement, and conditions for learning ...." (p. 1). Of course, this is true but is never done. The sheer cost of such an ambitious plan relegates it to the dead letter file. Before the virus, our urban areas suffered great financial deficits. With COVID-19, schools will find hiring nurses, counselors, and teachers to be more imperative than expending funds on a testing system of little proven value.

The report seeks to reinforce its recommendations for a different and better form of assessment by appealing to the need for greater equity in public education noting that "[t]he annual statewide assessment provides critical data to help measure equity in education" (p. 2). It is an arguable point whether state testing has exacerbated inequities among racial, ethnic, and socio-economic groups in the delivery of schooling.
Test-based school improvement strategies have been common since the basic skills movement in the 1970s. Likewise, any number of researchers have examined multiple measures to tease out relevant variables. Yet, the achievement gap remains. Instead of a boon, the model assessment envisioned by CAP may be a bane. Test results are used by realtors and home-buyers in crypto-redlining that leads to greater segregation of public schools (Note 9). School choice, which has contributed to resegregation of public education by offering charter schools to white families seeking to flee diverse schools, is perversely sold as an "equity issue."

Indeed, one can argue that mandated assessment in 2021 would be even more unfair than in the past. Poor children are likely to suffer the greatest loss in opportunities to learn and hence show the greatest deficits in test performance. It is an article of faith unsupported by history that these deficits would prompt greater efforts at remediation. One can imagine instead increased use of "retention in grade" as a result of lowered test performance, a practice shown repeatedly not to be in the interest of the future success of those retained (Note 10).

To the extent that the nation needs to know how badly the COVID-19 pandemic affected school learning, that question may be addressed by the 2021 National Assessment of Educational Progress (NAEP) - at least in so far as NAEP's methods permit such causal analysis. The NAEP's Governing Board voted 12 to 10 in favor of administering NAEP in 2021. That ten NAGB Board members demurred is in itself noteworthy. And there is some sense to moving forward with NAEP. NAEP tests fewer than 1 in 1,000 students in grades 4, 8, and 12. Its disruption to the curriculum is minimal. The results might help clarify the extent of the devastation of the coronavirus, though a clear and convincing answer is anything but guaranteed.

VII. Usefulness of the Report

Paradoxically, the shortcomings of the report highlight the case for suspending the federally mandated state assessments for the 2020-21 school year. It is already apparent only weeks into the academic year that the current school year will operate under unusual circumstances: start times will differ not only among states but among districts within states; the suspension of face-to-face instruction that occurred in spring 2020 could well take place again in spring 2021; methods adopted by districts to cope with the pandemic will differ substantially. A promised benefit of spring 2021 assessments is the parsing of the effects of these multiple influences on test data. Unfortunately, this has been tried countless times under far more favorable circumstances and has not proven successful.

An augmented assessment simply interferes with the schools' orderly recovery and strengthening from the multiple traumas of the past year. While it is hoped that serendipitous advantages will come to light, schools need vast latitudes - not expanded mandates from the federal government. Such mandates are not only tone deaf, they are disruptive and ill advised. For reasons contained in this CAP report, in fact, states should not have to request waivers of annual testing from the USDOE. The Department should announce suspension of the mandate without delay.

Notes


Writings of Some General Interest, Not Readily Available Elsewhere. To receive a printable copy of an article, please email gvglass @ gmail.com.

Thursday, July 11, 2024

NEPC Review: Student Assessment During COVID-19

An Extender Role for Nurses

1965

Morgan, J.R., Glass, G.V, Stevens, H.A., & Sindberg, R.M. (1965). A study of an extender role for nursing service personnel. Nursing Research, 14, 330-334.

Tuesday, July 9, 2024

Editorial, Review of Educational Research

1970

Gene V. Glass (1970). Editorial, Review of Educational Research, 40(3).

The first volume of the Review of Educational Research, which was published in 1931, contained a complete listing of all 329 members of the American Educational Research Association! AERA has grown to nearly 10,000 members, and educational research has grown with it. As the organization and the discipline have changed, AERA's publication program has changed. In 1964, the American Educational Research Journal was created as an outlet for original contributions to educational research. In April 1969, the Editorial Board of the Review of Educational Research proposed to the Association Council that the nearly forty-year old Review be reorganized so it might better serve the purposes of the Association's publications program. Since 1931, the Review has published solicited review manuscripts organized around a topic for each issue. Generally, a slate of fifteen topics was chosen by the Editorial Board and each topic was reviewed once each three years in one of the five issues per volume. An issue chairman was selected by the Editor and given the authority to choose chapters and authors for the issue. The Review has served well for many years both the discipline of educational research and the profession of education. As an organization and as a profession, we are grateful for the generosity and efforts of the more than 1,000 scholars who have contributed to the Review.

The purpose of the Review has always been the publication of critical, integrative reviews of published educational research. In the opinion of the Editorial Board, this goal can now best be achieved by pursuing a policy of publishing unsolicited reviews of research on topics of the contributor's choosing. In reorganizing the Review, AERA is not turning away from the task of periodically reviewing published research on a set of broad topics. The role played by the Review in the past will be assumed by an Annual Review of Educational Research, which AERA is currently planning. The reorganization of the Review of Educational Research is an acknowledgment of a need for an outlet for reviews of research that are initiated by individual researchers and shaped by the rapidly evolving interests of these scholars.

Knowledge about education is not increasing nearly as fast as is alleged, but the proliferation of the educational research literature is obvious. A body of literature can grow faster than a body of knowledge when it swells with false knowledge, inconclusive or contradictory findings, repetitive writing or simple dross. If knowledge is not subjected to scrutiny, it cannot be held confidently to be true. Moreover, if knowledge is to be "known" it must be "packed down" into assimilable portions either in reviews of literature or in textbooks. The integration of isolated research reports and the criticism of published works serve an essential purpose in the growth of a discipline. The organization and maintenance of old knowledge is no less important than the discovery of new knowledge. It is hoped that the new editorial policy of the Review, with its implicit invitation to all scholars, will contribute to the improvement and growth of disciplined inquiry on education.

The third number of the fortieth volume of the Review is the first issue to be published under the new editorial policy.

Gene V Glass

Editor

Monday, July 8, 2024

Testing for competence: Translating reform policy into practice

1991

Ellwein, M. C. and Glass, G. V (1991). Testing for competence: Translating reform policy into practice. Educational Policy, 5(1), 64-78.

Sunday, July 7, 2024

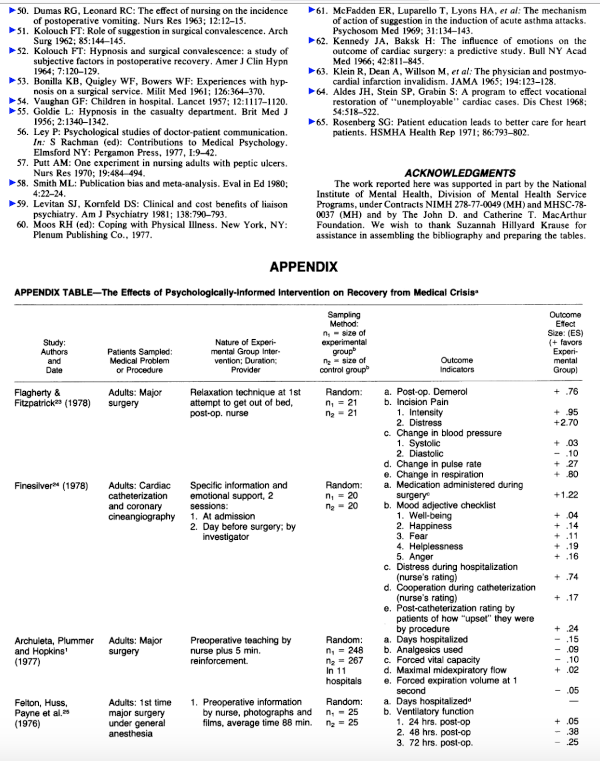

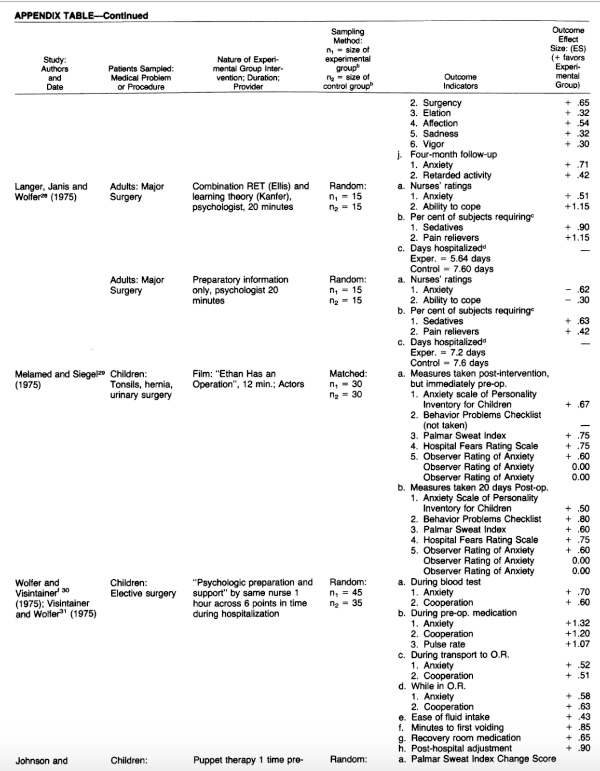

The Effects of Psychological Intervention on Recovery From Surgery and Heart Attacks

1982

The Effects of Psychological Intervention on Recovery From Surgery and Heart Attacks: An Analysis of the Literature

EMILY MUMFORD

HERBERT J. SCHLESINGER

GENE V GLASS

University of Colorado School of Medicine

Thursday, July 4, 2024

Educational Evaluation and Research: Similarities and Differences

1970

Gene V. Glass and Blaine R. Worthen

Laboratory of Educational Research

University of Colorado

This paper is excerpted in large part from the authors' paper "Educational Inquiry and the Practice of Education," which is scheduled to appear as a chapter in Frameworks for Viewing Educational Research, Development, Diffusion, and Evaluation, ed. by H. Del Shalock (Monmouth, Oregon: Teaching Research Division of Oregon State System of Higher Education, in press).

Curriculum evaluation is complex. It is not a simple matter of stating behavioral objectives or building a test or analyzing some data, though it may include these. A thorough curriculum evaluation will contain elements of a dozen or more distinct activities. The mixture of activities that a particular evaluator concocts will, of course, be influenced by resources (time, money, and expertise), the good-will of curriculum developers, and the like. But equally important (and more readily influenced) is what image the evaluator holds of his speciality: its responsibilities, duties, uniquenesses, and similarities to related endeavors. Some readers may think that entirely too much fuss is being made defining "evaluation." But we cannot help being concerned with the meanings of words and, more importantly, with how they influence action. We frequently meet persons responsible for evaluating a program whose efforts are victimized by the particular semantic problem addressed in this paper. By happenstance, habit, or methodological bias, they may, for example, label the trial and investigation of a new curriculum program with the epithet "research" or "experiment" instead of "evaluation." Moreover, the inquiry they conduct is different for their having chosen to call it a research project or an experiment, and not an evaluation.
Their choice predisposes the literature they read (it will deal with research or experimental design), the consultants they call in (only acknowledged experts in designing and analyzing experiments), and how they report the results (always in the best tradition of the American Educational Research Journal or the Journal of Experimental Psychology). These are not the paths to relevant data or rational decision-making about curricula. Evaluation is an undertaking separate from research. Not every educational researcher can evaluate a new curriculum, any more than every physiologist can perform a tonsillectomy.

Educational research and evaluation have much in common. However, since this is a time when the two are frequently confused with each other, there is a point in emphasizing their differences rather than their similarities. The best efforts of the investigator whose responsibility is evaluation but whose conceptual perspective is research may eventually prove to be worthless as either research or evaluation.

This paper represents an attempt to deal with one problem that should be resolved before much conceptual work on evaluation can proceed effectively--that is, how to distinguish the more familiar educational research from the newer, less familiar activity of educational evaluation. In the sections that follow, we deal with three interrelated aspects of the problem: first, we attempt to define and distinguish between research and evaluation as general classes of educational inquiry; second, we consider the different types of research and evaluation within each general class; and third, we discuss eleven characteristics that differentiate research from evaluation.

In spite of the shortcomings of simple verbal definitions, such definitions can serve as a point of departure. Those that follow serve only as necessary precursors and will be elaborated and defined more fully through the discussion of each activity later in this paper.

a) Research is the activity aimed at obtaining generalizable knowledge by contriving and testing claims about relationships among variables or describing generalizable phenomena. This knowledge, which may result in theoretical models, functional relationships, or descriptions, may be obtained by empirical or other systematic methods and may or may not have immediate application.

b) Evaluation is the determination of the worth of a thing. It is the process of obtaining information for judging the worth of an educational program, product, procedure, or educational objective, or the potential utility of alternative approaches designed to attain specified objectives. According to Scriven: "The activity consists simply in gathering and combining of performance data with a weighted set of goal scales to yield either comparative or numerical ratings; and in the justification of (a) the data-gathering instruments, (b) the weightings, and (c) the selection of goals." [Note 1]

We have not defined research very differently from the way in which it is generally viewed (e.g., by Kerlinger, 1964). However, definitions of evaluation are much more varied [Note 2], and while most of these definitions are relevant to evaluation, in that they describe or define parts of the total evaluation process or activities attendant on evaluation, they seem to address only obliquely what is for us the touchstone of evaluation: the determination of merit or worth. Our definition is intended to focus directly on the systematic collection and analysis of information to determine the worth of a thing.
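Scriven's description quoted above, combining performance data with a weighted set of goal scales to yield a numerical or comparative rating, can be illustrated with a small sketch. The goal names, weights, and scores below are hypothetical and chosen only for illustration, and the weighted-average rule is merely one simple way of combining them, not a procedure prescribed by Scriven or by the present authors.

```python
def weighted_rating(performance, weights):
    """Combine per-goal performance scores into one numerical rating.

    performance: dict mapping goal name -> score on a common 0-10 scale
    weights:     dict mapping goal name -> non-negative importance weight
    The common scale and the weighted-average rule are illustrative assumptions.
    """
    if set(performance) != set(weights):
        raise ValueError("performance and weights must cover the same goals")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(performance[g] * weights[g] for g in performance) / total_weight


# Hypothetical goal scales, weights, and scores for two competing curricula.
weights = {"reading": 3, "computation": 2, "attitude": 1}
curriculum_a = {"reading": 8, "computation": 6, "attitude": 4}
curriculum_b = {"reading": 6, "computation": 8, "attitude": 8}

rating_a = weighted_rating(curriculum_a, weights)  # numerical rating for A
rating_b = weighted_rating(curriculum_b, weights)  # numerical rating for B
b_preferred = rating_b > rating_a                  # comparative rating
```

The arithmetic is the trivial part. What Scriven's definition also demands, the justification of the instruments, the weightings, and the selection of goals, is a matter of judgment that no such computation supplies.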

Working within this framework, the curriculum evaluator would first identify the curriculum goals and, using input from appropriate reference groups, determine whether or not the goals are good for the students, parents, and community served by the curriculum program. He would then collect evaluative information that bears on those goals as well as on identifiable side-effects that result from the program. When the data have been analyzed and interpreted, the evaluator would judge the worth of the curriculum and in most cases communicate this in the form of a recommendation to the individual or body ultimately responsible for making decisions about the program.

Both research and evaluation can be further divided into subactivities. The distinction between basic and applied research seems to be well entrenched in the parlance of educational research. Although these constructs might more properly be thought of as the ends of a continuum than as a dichotomy, they do have utility in differentiating between broad classes of activities. [Note 3] The distinction between the two also helps when the relationship of research to evaluation is considered. The United States National Science Foundation adopted the following definitions of basic and applied research:

Basic research is directed toward increase of knowledge; it is research where the primary aim of the investigator is a fuller understanding of the subject under study rather than a practical application thereof. Applied research is directed toward practical applications of knowledge. [Applied research projects] have specified commercial objectives with respect to either products or processes. [Note 4]

Applied research, when successful, results in plans, blueprints, or directives for development in ways that basic research does not. In applied research, the knowledge produced must have almost immediate utility, whereas no such constraint is imposed on basic research. Basic research results in a deeper understanding of phenomena in systems of related phenomena, and the practical utility of the knowledge thus gained need not be foreseen.

Two activities that might be considered variants of applied research are institutional research and operations research, activities aimed at supplying institutions or social systems with data relevant to their operations. To the extent that the conclusions of inquiries of this type are generalizable, at least across time, these activities may appropriately be subsumed under the "research" rubric. However, where the object of the search becomes nongeneralizable information on performance characteristics of a specific program or process, the label "evaluation" might more appropriately be applied.

Evaluation has sometimes been considered merely a form of applied research that focuses only on one curriculum program, one course, or one lesson. This view ignores an obvious difference between the two--the level of generality of the knowledge produced. Applied, as opposed to basic, research is mission-oriented and aimed at producing knowledge relevant to providing a solution (generalizable) to a general problem. Evaluation is focused on collecting specific information relevant to a specific problem, program, or product.

It was mentioned earlier that many "types" of evaluation have been proposed in writings on the subject. For example, the process of needs analysis (identifying and comparing intended outcomes of a system with actual outcomes on specified variables) might well qualify as an evaluation activity if, as Scriven (1967) and Stake (1970) have suggested, the intended outcomes are themselves thoroughly evaluated. The assessment of alternative plans for attaining specified objectives might also be considered a unique evaluation function (see the discussion of "input" evaluation by Stufflebeam, 1968), although it seems to us that such an assessment might be considered a variant form of outcome evaluation that occurs earlier in the temporal sequence and attempts to establish the worth of alternative plans for meeting desired goals. Other proposed evaluation activities such as "program monitoring" (Worthen & Gagne, 1969) or "process evaluation" (Stufflebeam, 1968) seem in retrospect to belong less to evaluation than to operations management or some other function in which information is collected but no evaluation occurs.

The difficulty inherent in deciding whether or not other activities of the types discussed above should be considered as "evaluation" may well stem from the conflict of the roles and goals of evaluation that was mentioned by Scriven (1967). Evaluation can contribute to the construction of a curriculum program, the prediction of academic success, the improvement of an existing course, or the analysis of a school district's need for compensatory education. But these are roles it can play and not the goal it seeks. The goal of evaluation must be to answer questions of selection, adoption, support, and worth of educational materials and activities. It must be directed toward answering such questions as, "Are the benefits of this curriculum program worth its cost?" or "Is this textbook superior to its competitors?" The typical evaluator is trained to play other roles besides evaluating. However, all of his activities (e.g., test construction, needs assessment, context description) do not become evaluation by merit of the fact that they are done by an evaluator. (Evaluators brush their teeth, but brushing teeth is not therefore evaluation.)

Unless inclusion of hybrid activities becomes essential to the point under consideration, the terms "research" and "evaluation" will be used in the remainder of this paper to refer to the "purest" type of each, basic research and outcome evaluation. That this approach results in oversimplification is admitted, but the alternative of attempting to discuss all possible nuances is certain to result in such complexity that the major points in this paper would be completely obfuscated.

Eleven characteristics of inquiry that distinguish research from evaluation are discussed below.

- Motivation of the Inquirer. Research and evaluation appear generally to be undertaken for different reasons. Research is pursued largely to satisfy curiosity; evaluation is done to contribute to the solution of a particular problem. The researcher is intrigued; the evaluator (or at least, his client) is concerned. The researcher may believe that his work has greater long-range payoff than the evaluator's. However, one must be nimble to avoid becoming bogged down in the seeming paradox that the policy decision to support basic inquiry because of its ultimate practical payoff does not imply that researchers are pursuing practical ends in their daily work.

- The Objectives of the Search. Research and evaluation seek different ends. Research seeks conclusions; evaluation leads to decisions (see Tukey, 1960). Cronbach and Suppes distinguish between decision-oriented and conclusion-oriented inquiry. In a decision-oriented study the investigator is asked to provide information wanted by a decision-maker: a school administrator, a government policy-maker, the manager of a project to develop a new biology textbook, or the like. The decision-oriented study is a commissioned study. The decision-maker believes that he needs information to guide his actions and he poses the question to the investigator. The conclusion-oriented study, on the other hand, takes its direction from the investigator's commitments and hunches. The educational decision-maker can, at most, arouse the investigator's interest in a problem. The latter formulates his own question, usually a general one rather than a question about a particular institution. The aim is to conceptualize and understand the chosen phenomenon; a particular finding is only a means to that end. Therefore, he concentrates on persons and settings that he expects to be enlightening. [Note 5] Conclusion-oriented inquiry is much like what is referred to here as research; decision-oriented inquiry typifies evaluation as well as any three words can.

- Laws versus Descriptions. Closely related to the distinction between conclusion-oriented and decision-oriented are the familiar concepts of nomothetic (law-giving) and idiographic (descriptive of the particular). Research is the quest for laws, that is, for statements of the relationships among two or more variables or phenomena. Evaluation merely seeks to describe a particular thing with respect to one or more scales of value.

- The Role of Explanation. The nomothetic and idiographic converge in the act of explanation, namely, in the conjoining of general laws with descriptions of particular circumstances, as in "if you like three-minute eggs back home in Vancouver you'd better ask for a five-minute egg at the Brown Palace in Denver because the boiling point of water is directly proportional to the absolute pressure [the law], and at 5,280 ft. the air pressure is so low in Denver that water boils at 195°F [the circumstances]." Scientific explanations require scientific laws, and the disciplines related to education appear to be far from the discovery of general laws on which explanations of incidents of schooling can be based. There is considerable confusion among investigators in education about the extent to which evaluators should explain ("understand") the phenomena they evaluate. A fully proper and useful evaluation can be conducted without producing an explanation of why the product or program being evaluated is good or bad or of how it operates to produce its effects. It is fortunate that this is so, since evaluation in education is so needed and credible explanations of educational phenomena are so rare.

- Autonomy of the Inquiry. The important principle that science is an independent and autonomous enterprise is well stated by Kaplan: It is one of the themes of this book that the various sciences, taken together, are not colonies subject to the governance of logic, methodology, philosophy of science, or any other discipline whatever, but are, and of right ought to be, free and independent. Following John Dewey, I shall refer to this declaration of scientific independence as the principle of autonomy of inquiry. It is the principle that the pursuit of truth is accountable to nothing and to no one not a part of that pursuit itself.

Not surprisingly, autonomy of inquiry has proved to be an important characteristic typifying research. As Cronbach and Suppes have indicated, evaluation is undertaken at the behest of a client, while the researcher sets his own problems. It will be seen later that the differing degree of autonomy that the researcher and the evaluator enjoy has implications for how they should be trained and how their respective inquiries are pursued. [Note 6]

- Properties of the Phenomena That Are Assessed. Evaluation seeks to assess social utility directly. Research may yield evidence of social utility, but only indirectly--because empirical verifiability of general phenomena and logical consistency may eventually be socially useful. A touchstone for discriminating between an evaluator and a researcher is to ask whether the inquiry would be regarded as a failure if it produced no information on whether the phenomenon studied was useful or useless. A researcher answering qua researcher would probably say "No." Inquiry may be seen as directed toward the assessment of three properties of statements about a phenomenon: its empirical verifiability by accepted methods, its logical consistency with other accepted or known facts, and its social utility. Most disciplined inquiry aims to assess each property in varying degrees. In Figure 1, several areas of inquiry within psychology are classified with respect to the degree to which they seek to assess each of the above three properties. Their distance from each vertex is inversely related to the extent to which they seek the property it represents. [Note 7]

- "Universality" of the Phenomena Studied. Perhaps the highest correlate of the research-evaluation distinction is the "universality" of the phenomena being studied. (We apologize for the grandness of the term "universal" and our inability to find a more modest one to convey the same meaning.) Researchers work with constructs having a currency and scope of application that make the objects one evaluates seem parochial by comparison. An educational psychologist experiments with "reinforcement" or "need achievement," which he regards as neither specific to geography nor to one point in time. The effects of positive reinforcement following upon a response are assumed to be phenomena shared by most men in most times; moreover the number of specific instances of human behavior that are examples of the working of positive reinforcement is great. Not so with the phenomena studied in evaluation. A particular textbook, an organizational plan, and a filmstrip have a short life expectancy and may not be widely shared. However, whenever their cost or potential payoff rises above negligible level, they are of interest to the evaluator. Three aspects of the generalizability ("universality") of a phenomenon can be identified: generality across time (Will the phenomenon--a textbook, "self-concept," etc.--be of interest fifty years hence?); generality across geography (Is the phenomenon of any interest to people in the next town, the next state, across the ocean?); applicability of the general phenomenon to a number of specific instances (Are there many specific examples of the phenomenon being studied or is this the "one and only"?). These three features of the object of an educational inquiry can be used to classify different inquiry types. 
Three types of inquiry are program evaluation (the evaluation of a complex of people, materials and organization that make up a particular educational program), product evaluation (the evaluation of a medium of schooling, such as a book, a film, or a recorded tape) and educational research. Program evaluation is depicted as concerned with a phenomenon (an "educational program") that has limited generalizability across time and geography. For example, the innovative "ecology curriculum" (including instructional materials, staff, students, and other courses in the school) in the Middletown Public Schools will probably not survive the decade; it is of little interest to the schools in Norfolk, which have a different set of environmental problems and instructional resources, and has little relationship to other curricula with other objectives. Product evaluation is concerned with assessing the worth of something, such as a new ecology textbook or an overhead projector, which can be widely disseminated geographically but will not be of interest ten years hence or produce any reliable knowledge about the educational process in general if its properties are studied. The concepts upon which educational research is carried out are supposed to be relatively permanent and applicable to schooling nearly everywhere. As such they should subsume a large number of instances of teaching and learning.

- Salience of the Value Question. At least in theory, a value can be placed on the outcome of an inquiry, and all inquiry is directed toward the discovery of something worthwhile and useful. In what we are calling evaluation, it is usually quite clear that some question of value is being addressed. Indeed, in evaluation, value questions are the sine qua non, and usually they determine what information is sought, whereas in research they are not the direct object. This is not to say that value questions are not germane in research, however. The goals may be the same in both research and evaluation--placing values on alternative explanations or hypotheses--but the roles, the use to which information may be put, may be quite different. For example, the acquisition of knowledge and improvement of self-concept are clearly value laden. The value question in the derivation of a new oblique transformation technique in factor analysis is not so obvious, but it is there nonetheless. Our purpose in raising this point is to call attention to the fact that, with respect to assessing the value of things, the difference between research and evaluation is one of degree, not of kind.

- Investigative Techniques. Many have recently expressed the opinion that research and evaluation should employ different techniques for gathering and processing data, that the methods appropriate to research--such as comparative experimental design--are not appropriate to evaluation, or that, with respect to techniques of empirical inquiry, evaluation is a thing apart. [Note 8] These arguments have been reviewed and answered elsewhere (Glass, 1968). We shall not cover old ground here; we wish simply to note that while there may be legitimate differences between research and evaluation methods (Worthen, 1968), we see far more similarities than differences between research and evaluation with regard to the techniques by which empirical evidence is collected and judged to be sound. As Stake and Denny have indicated: "The distinction between research and evaluation can be overstated as well as understated.... Researchers and evaluators work within the same inquiry paradigm.... [Training programs for] both must include skill development in general educational research methodology." [Note 9] Hemphill has expressed the same opinion: "The consequence of the differences between the proper function of evaluation studies and research studies is not to be found in differences in the subject interest or in the methods of inquiry of the researcher and of the evaluator." [Note 10] The notion that evaluation is really only sloppy research has a low incidence in writing but a high incidence in conversation--usually among researchers but also among some evaluators. This bit of slander arises from misconstruing the concept of "experimental control." One form of experimental control is achieved by the randomization of extraneous influences in a comparative experiment so that the effect of a possibly gross intervention (or treatment) on a dependent variable can be observed.
Such control can be achieved either in the laboratory or the field; achieving it is a simple matter of designing an internally valid plan for assigning experimental units to treatment conditions. Basic research has no proprietary rights to such experimental control; it can be attained in the comparative study of two reinforcement schedules as well as in the comparative study of two curricula. The second form of control concerns the ability of the experimenter to probe the complex of conditions he creates when he intervenes to set up an "independent variable" and to determine which critical element in a swarm of elements is fundamental in the causal relationship between the independent and dependent variables. Control of this type occupies the greater part of the efforts of the researcher to gain understanding; however, it is properly of little concern to the evaluator. It is enough for the evaluator to know that something attendant upon the installation of Curriculum A (and not an extraneous, "uncontrolled" influence unrelated to the curriculum) is responsible for the valued outcome. To give a more definite answer about what that something is would carry evaluation into analytical research. Analytical research on the non-generalizable phenomena of evaluation is seldom worth the expense. It is only in this sense that evaluation (in the abstract) can be sloppy.

- Criteria for Judging the Activity. The two most important criteria for judging the adequacy of research are internal validity (to what extent the results of the study are unequivocal and not confounded with extraneous or systematic error variance) and external validity (to what extent the results can be generalized to other units--subjects, classrooms, etc.--with characteristics similar to those used in the study). If one were forced to choose the two most important of the several criteria that might be used for judging the adequacy of evaluation, they would probably be isomorphism (to what extent the information obtained is isomorphic with the reality-based information desired) and credibility (to what extent the information is viewed as believable by clients who need to use the information).

- Disciplinary Base. The call to make educational research multidisciplinary is good advice for the research community as a whole; it is doubtful that the individual researcher is well advised, however, to attack his area of interest simultaneously from several different disciplinary bases. Some researchers can fruitfully work in the cracks between disciplines; but most will find it challenge enough to deal with the problems of education from the perspective of one discipline at a time. The specialization required to master even a small corner of a discipline works against the wish to make Leonardos of all educational researchers. That the educational researcher can afford to pursue inquiry within one paradigm and the evaluator cannot is one of many consequences of the autonomy of inquiry. When one is free to define his own problems for solution (as the researcher is), he seldom asks a question that takes him outside of the discipline in which he was trained. Psychologists pose questions that can be solved by the methods of psychology, as do sociologists, economists, and other scientists, each to his own. The seeds of the answer to a research question are planted with the question. The curriculum evaluator enjoys less freedom in the definition of the questions he must answer. Hence, the answers are not as likely to be found by use of a stereotyped methodology. Typically, then, the evaluator finds it necessary to employ a wider range of inquiry perspectives and techniques to deal with questions that do not have predestined answers. [Note 11]

To state as succinctly as possible the views that have led us to take the positions presented previously:

- Before adequate evaluation theory can be developed, it is necessary to differentiate clearly between evaluation and research.

- Distinguishing evaluation from research at an abstract level has direct implications for conducting evaluations and training evaluation personnel.

- Evaluation must include not only the collection and reporting of information that can be used for evaluative purposes, but also the actual judgment of worth.

- Evaluation and research are both disciplined inquiry activities that depend heavily on empirical inquiry. Evaluation draws more on philosophical inquiry and less on historical inquiry than does research.

- There are a variety of types of evaluation and research that differ from one another and increase the difficulty of distinguishing research from evaluation.

- More is to be gained at this time from drawing distinctions between evaluation and research than from emphasizing their communality. There are at least eleven characteristics of inquiry that distinguish evaluation from research. Each is important to the curriculum evaluator in guiding his approach to the evaluation of school programs and curriculum materials.

1. Scriven (1967, p. 40).

2. See, for example, definitions of evaluation provided (either explicitly or implicitly) in the following writings: Provus (1969), Scriven (1967), Stake (1967), Stufflebeam et al. (1970), and Tyler (1949).

3. Guba and Clark (n.d.) argue that the basic distinction applied is dysfunctional and that the two kinds of activity do not rightfully belong on the same continuum. The authors admit to problems with this, as with any, classification scheme. However, attempts to replace such accepted distinctions with yet another classification system seem destined to meet with little more success than have attempts to discard the descriptors "Democrat" and "Republican" because of the wide variance within the political parties thus identified.

4. National Science Foundation (1960, p. 5).

5. Cronbach and Suppes (1969, pp. 20-21).

6. Kaplan (1964, pp. 3-6).

7. Since this conceptualization of inquiry was first presented (Glass, 1969), the authors have found some interesting corroboration of an authoritative sort. Definition 3(a) of "theory" in Webster's Third New International Dictionary is tripartite: "The coherent set of hypothetical, conceptual and pragmatic principles forming the general form of reference for a field of inquiry (as for deducing principles, formulating hypotheses for testing, undertaking actions)." The three inquiry activities in Webster's definition correspond closely to the three inquiry properties in Figure 1.

8. See, for example, Carroll (1965), Cronbach (1963), Guba and Stufflebeam (1968), and Stufflebeam (1968).

9. Stake and Denny (1969, p. 374).

10. Hemphill (1969, p. 220).

11. See Hastings (1969). The discussion has taken on a utopian tone. In reality much evaluation is becoming as stereotyped as most research. Evaluators often take part in asking the questions they will ultimately answer, and they are all too prone to generate questions out of a particular "evaluation model" (e.g., the Stake model, the CIPP model) rather than a "discipline." Stereotyping of method threatens evaluation as it threatens research (Glass, 1969).

REFERENCES

Carroll, J. B. (1965). School Learning Over the Long Haul. Learning and the Educational Process. Edited by J. D. Krumboltz. Chicago: Rand McNally.

Cronbach, L. J. (1963). Course improvement through evaluation. Teachers College Record, 64, 672-683.

Cronbach, L. J. and Suppes, P. (1969). Research for Tomorrow's Schools: Disciplined Inquiry for Education. New York: Macmillan.

Galfo, A. J. and Miller, E. (1970). Interpreting Educational Research. 2nd Ed. Dubuque, Iowa: Wm. C. Brown Co.

Glass, G. V. (1967). Reflections on Bloom's "Toward a Theory of Testing Which Includes Measurement-Evaluation-Assessment." Research Paper No. 8. Boulder, Colorado: Laboratory of Educational Research, University of Colorado.

Glass, G. V. (1968). Some Observations on Training Educational Researchers. Research Paper No. 22. Boulder, Colorado: Laboratory of Educational Research, University of Colorado.

Glass, G. V. (1969). The Growth of Evaluation Methodology. Research Paper No. 27. Boulder, Colorado: Laboratory of Educational Research, University of Colorado.

Guba, E. G. and Stufflebeam, D. L. (1968). Evaluation: The Process of Stimulating, Aiding, and Abetting Insightful Action. An address delivered at the Second National Symposium for Professors of Educational Research, Boulder, Colorado, November 21, 1968.

Guba, E. G. and Clark, D. L. (n.d.). Types of Educational Research. Columbus, Ohio: The Ohio State University. (Mimeographed.)

Hastings, J. T. (1969). The kith and kin of educational measures. Journal of Educational Measurement, 6, 127-130.

Hemphill, J. K. (1969). The Relationship Between Research and Evaluation Studies. Educational Evaluation: New Roles, New Means. Edited by R. W. Tyler. The 68th Yearbook of the National Society for the Study of Education, Part II. Chicago, Ill.: National Society for the Study of Education.

Hillway, T. (1964). Introduction to Research. 2nd Ed. Boston: Houghton Mifflin.

Kaplan, A. (1964). The Conduct of Inquiry. San Francisco: Chandler.

Kerlinger, F. N. (1964). Foundations of Behavioral Research. New York: Holt, Rinehart & Winston.

National Science Foundation. (1960). Reviews of Data on Research and Development, No. 17. NSF-60-10.

Provus, M. (1969). Evaluation of Ongoing Programs in the Public Schools. Educational Evaluation: New Roles, New Means. Edited by R. W. Tyler. The 68th Yearbook of the National Society for the Study of Education, Part II. Chicago, Ill.: National Society for the Study of Education.

Scriven, M. (1958). Definitions, Explanations, and Theories. Minnesota Studies in the Philosophy of Science, Vol. 2. Edited by H. Feigl, M. Scriven, and G. Maxwell. Minneapolis, Minnesota: University of Minnesota Press.

Scriven, M. (1967). The Methodology of Evaluation. Perspectives of Curriculum Evaluation. Edited by R. E. Stake. Chicago: Rand McNally.

Stake, R. E. (1967). Educational Information and the Ever-Normal Granary. Research Paper No. 6. Boulder, Colorado: Laboratory of Educational Research, University of Colorado.

Stake, R. E. and Denny, T. (1969). Needed Concepts and Techniques for Utilizing More Fully the Potential of Evaluation. Educational Evaluation: New Roles, New Means. Edited by R. W. Tyler. The 68th Yearbook of the National Society for the Study of Education, Part II. Chicago, Ill.: National Society for the Study of Education.

Stake, R. E. (1970). Objectives, Priorities, and Other Judgment Data. Review of Educational Research, 40(2), 181-212.

Stufflebeam, D. L. (1968). Evaluation as Enlightenment for Decision-Making. Columbus, Ohio: Evaluation Center, Ohio State University.

Stufflebeam, D. L. et al. (1970). Educational Evaluation and Decision-Making. Columbus, Ohio: Evaluation Center, Ohio State University.

Tukey, J. W. (1960). Conclusions vs. decisions. Technometrics, 2, 423-433.

Tyler, R. W. (1949). Basic Principles of Curriculum and Instruction. Chicago: University of Chicago Press.

Worthen, B. R. (1968). Toward a taxonomy of evaluation designs. Educational Technology, 8(15), 3-9.

Worthen, B. R. and Gagne, R. M. (1969). The Development of a Classification System for Functions and Skills Required of Research and Research-Related Personnel in Education. Technical Paper No. 1. Boulder, Colorado: AERA Task Force on Research Training.

Monday, July 1, 2024

The Wisdom of Scientific Inquiry on Education

Gene V Glass

University of Colorado

This paper was written in 1970 and presented at a Convention of the National Association for Research on Science Teaching in Silver Spring, Maryland on 24 March 1971.

INTRODUCTION

A few years ago, Ralph Tyler assessed the state of research on science teaching and found it in need of a more theoretical, scientific perspective. My own assessment of the field now and then is diametrically opposed to Tyler's and, incidentally, to the position taken by Pella (1966) at about the same time Tyler wrote on this subject. For fear that a paraphrasing of Tyler's position might distort his meaning, I shall quote him at some length. Tyler's prescription for improved research in science education was as follows:

Theory is the all embracing end of basic research in seeking to provide a comprehensive map of the terrain of science education. Concepts are the smaller areas which comprise the total map, or to put the metaphor in another way, the complex of science education can be understood more readily by considering the concepts as major parts of the whole, and studying these parts in greater detail than is possible with the total.... The concepts and the dynamic models furnish the map which we seek in order to understand the factors involved in, and the process of science education. They form the major part of the theory....

My criticism of current research is its failure to be guided by, or to produce an adequate map of the factors and processes in science education.... The outlining, elaboration, and testing of such a map seems to me to be the necessary focus of our attention if we are to improve research in this field. (Tyler, 1967-1968, p. 43.)

Tyler went on to name eleven prominent areas of "the map": 1) the objectives of science education; 2) the teaching-learning process; 3) the organization of learning experiences; 4) the outcomes of science education; 5) the student's development; 6) the development of teachers; 7) the objectives of education for science teachers; 8) the teaching-learning process of teacher education; 9) the outcomes of teacher education; 10) the organization of the teacher's learning experiences; 11) the process of change in science education.

In my opinion, we should not strive to make research on science education or education generally more scientific. Indeed, we who call ourselves educational researchers should turn away from elucidatory inquiry in all areas of education. This type of inquiry, directed toward the construction of theories or models for the understanding and explanation of phenomena, should be left to the social and natural sciences because it is currently unproductive in education and is a profligate expenditure of precious resources of time, money, and talent. We should turn instead toward evaluative inquiry of educational developments which are the creations of masterful teachers inspired by what reliable knowledge exists in psychology, sociology, and the other sciences concerning the educating process.

My argument depends heavily on a distinction between two types of inquiry -- elucidatory and evaluative. The contrast between elucidation and evaluation is well established in aesthetics. Elucidatory aesthetics is the attempt to explain what constitutes art, or more generally beauty. Evaluative aesthetics concerns the discrimination between good and bad art -- what is beautiful and what is less beautiful -- without explanation of why an art work is good or bad. It is necessary to turn attention to the definitional problem and the related problem of distinguishing between elucidatory and evaluative inquiry.

ELUCIDATORY AND EVALUATIVE INQUIRY

Elucidatory inquiry is the process of obtaining generalizable knowledge by contriving and testing claims about relationships among variables or generalizable phenomena. (Apologies to mathematics, history, philosophy, etc. for the obvious empirical social science bias in the definition and the thinking to follow.) This knowledge results in functional or statistical relationships, models, and ultimately theories. When the results of elucidatory inquiry are combined with knowledge of particular circumstances, one obtains explanations, in the sense of Braithwaite (1953).

Evaluative inquiry is the determination of the worth of a thing. In education, it involves obtaining information to judge the worth of a program, product, or procedure. According to Scriven (1967, p. 40), "The activity consists simply in gathering and combining of performance data with a weighted set of goal scales to yield either comparative or numerical ratings; and in the justification of (a) the data-gathering instruments, (b) the weightings, and (c) the selection of goals."
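Scriven's description can be read as simple arithmetic. The sketch below is only an illustration under assumptions that are not in the source: the goal names, weights, and scores are hypothetical, and a weighted average is just one of several ways performance data might be combined with goal scales.

```python
# Hypothetical illustration of combining performance data with weighted goal scales.
# Goal names, weights, and scores are invented for this example; none come from Scriven.

def weighted_rating(scores, weights):
    """Return the weighted average of goal-scale scores (a numerical rating)."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[goal] * scores[goal] for goal in weights) / total_weight

# Assumed weights: how much each goal counts in the overall judgment.
weights = {"achievement": 3, "student_interest": 2, "cost": 1}

# Assumed performance data for two curricula, each scored on a 1-5 scale per goal.
curriculum_a = {"achievement": 4, "student_interest": 3, "cost": 2}
curriculum_b = {"achievement": 3, "student_interest": 5, "cost": 4}

rating_a = weighted_rating(curriculum_a, weights)  # numerical rating for A
rating_b = weighted_rating(curriculum_b, weights)  # numerical rating for B

# Comparative rating: which curriculum scores higher under these weights.
# (A tie would fall to "B" here; a real evaluation would treat ties explicitly.)
preferred = "A" if rating_a > rating_b else "B"
```

The three things Scriven says must be justified correspond to the inputs of the sketch: the instruments that produce the scores, the weights, and the choice of which goals appear at all. A different set of weights can reverse the comparison, which is why the justification is part of the evaluation itself.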

Elucidatory and evaluative inquiry have many defining characteristics. Each is only imperfectly correlated with the tendency of informed persons to call activity A "elucidatory" and activity B "evaluative" just as clinical psychologists use "anxiety" as a construct to differentiate instances of behavior in a way that is not perfectly reproduced by a single measure or defining characteristic. The conceptualizations of elucidatory and evaluative inquiry are enriched by the identification of any characteristic of inquiry which has a non-zero correlation with the tendency of intelligent persons to speak of "elucidation" or "evaluation" when discussing a particular inquiry activity. Scriven (1958, p. 175) referred to such terms as "cluster concepts" or "correlational concepts." Such concepts, e.g., "schizophrenia," are known by their indicators all of which are imperfectly related to them.

CHARACTERISTICS OF INQUIRY

Nine characteristics of inquiry which distinguish elucidation from evaluation are recognizable.

[The next eighteen paragraphs are based on a segment of the author's paper with Blaine R. Worthen entitled "Educational Inquiry and the Practice of Education" which will appear as a chapter in Schalock, H. Del (Ed.) Frameworks for Viewing Educational Research & Development, Diffusion and Evaluation. Monmouth, Oregon: Teaching Research Division of Oregon State System of Higher Education. (In press)]

1. Motivation of the Inquirer

Elucidatory and evaluative inquiry appear generally to be undertaken for different reasons. The former is pursued largely to satisfy curiosity; the latter is done to contribute to the solution of a practical problem. The theory builder is intrigued; evaluators (or at least their clients) are concerned with worth and value.

Although the elucidatory inquirers may believe that their work has greater long-range payoff than the evaluator's, they are no less motivated by curiosity when performing their unique function. One must be nimble to avoid becoming bogged down in the seeming paradox that the policy decision to support basic inquiry because of its ultimate practical payoff does not imply that basic inquirers are pursuing practical ends in their daily work. Scriven (1969) argues that as regards research in mathematics, practical pay-off is increased to the extent that the mathematician is convinced he or she is not seeking practically significant results.

2. The Objective of the Search

Elucidatory and evaluative inquiry seek different ends. The former seeks conclusions; evaluation leads to decisions (see Tukey, 1960). Cronbach and Suppes (1969, pp. 20-21) distinguished between decision-oriented and conclusion-oriented inquiry.

In a decision-oriented study the investigator is asked to provide information wanted by a decision-maker: a school administrator, a government policy-maker, the manager of a project to develop a new biology textbook, or the like. The decision-oriented study is a commissioned study. Decision-makers believe that they need information to guide their actions and they pose the question to the investigator. The conclusion-oriented study, on the other hand, takes its direction from the investigator's commitments and hunches. The educational decision-maker can, at most, arouse the investigator's interest in a problem. The latter formulates the question, usually a general one rather than a question about a particular institution. The aim is to conceptualize and understand the chosen phenomenon; a particular finding is only a means to that end.

Conclusion-oriented inquiry is much like what is here referred to as elucidatory inquiry; decision-oriented inquiry typifies evaluative inquiry as well as any three words can.

3. Laws vs. Description

Closely related to the distinction between conclusion-oriented and decision-oriented are the familiar concepts of nomothetic (law-giving) and idiographic (descriptive of the particular). Elucidatory inquiry is the search for laws, i.e., statements of relationship among two or more variables or phenomena. Evaluation merely seeks to describe a particular thing with respect to one or more scales of value.

4. The Role of Explanation

Scientific explanations require scientific laws, and the disciplines related to education appear to be far from discovery of the general laws on which explanations of incidents of schooling can be based. Explanations are not the goal of evaluation. A fully proper and useful evaluation can be conducted without producing an explanation of why the product or program being evaluated is good or bad or how it operates to produce its effects.

Elucidatory inquiry is characterized by a succession of studies in which greater control ("control" in the sense of the ability to manipulate specific components of independent variables) is exercised at each stage so that relationships among variables can be determined at more fundamental levels. Science seems to be an endless search for subsurface explanations, i.e., accountings of surface phenomena in terms of relationships among variables at a more subtle, covert level. Subsurface explanations are not sought after for themselves, or else the ultimate goal of science is some personal aberration, such as the science of Lawsonomy, a bizarre construction of an oddball inventor from Milwaukee. They are sought because greater precision, greater trust, the answer to the next "why?" always seems to be one stratum below the phenomena we see now. When ethologists find that the swallows return to Capistrano each March 19 because they are following the insects they feed on, they immediately ask why the insects return on March 19; and so it goes. The history of learning psychology is an excellent illustration of the continual search for subsurface explanations, though countless examples could be found in any science. Psychologists have sought increasingly more fundamental explanations of learning along a path that has led through the Law of Effect, unravelings of the nature of reward, drive states, secondary reinforcement, and which leads inexorably toward brain physiology and the void beyond. Elucidatory inquiry chases subsurface explanations. However, it is usually enough for the evaluator to know that something attendant upon the installation of Harvard Project Physics (and not an extraneous, "uncontrolled" influence unrelated to the curriculum) is responsible for the valued outcomes; to give a more definite answer about what that something is would carry evaluation into analytical research.

5. Autonomy of the Inquiry

The independence and autonomy of science is so important that Kaplan (1964, pp. 3-6) wrote first of it in his classic The Conduct of Inquiry:

It is one of the themes of this book that the various sciences, taken together, are not colonies subject to the governance of logic, methodology, philosophy of science, or any other discipline whatever, but are, and of right ought to be, free and independent. Following John Dewey, I shall refer to this declaration of scientific independence as the principle of autonomy of inquiry. It is the principle that the pursuit of truth is accountable to nothing and to no one not a part of that pursuit itself.

Not surprisingly, autonomy of inquiry proves to be an important characteristic for distinguishing elucidatory and evaluative inquiry. As was seen incidentally in the above quote from Cronbach and Suppes, evaluation is undertaken at the behest of a client who expects that particular questions will be answered. Elucidatory inquiry must be free to follow leads which pique the curiosity of those who know most intimately the aspirations of the discipline.

6. Properties of the Phenomena Which are Assessed

Evaluation is an attempt to assess the worth of a thing, and elucidatory inquiry is an attempt to assess scientific truth. Except that truth is highly valued and worthwhile, this distinction serves fairly well to discriminate elucidatory and evaluative inquiry. The distinction can be given added meaning if "worth" is taken as synonymous with "social utility" (which is presumed to increase with improved health, happiness, life expectancy, increases in certain kinds of knowledge, etc., and decreases with increases in privation, sickness, ignorance, and the like) and if "scientific truth" is identified with two of its possible forms: 1) empirical verifiability of statements about general phenomena with accepted methods of inquiry; 2) logical consistency of such statements. Elucidatory inquiries may yield evidence of social utility, but only indirectly -- because empirical verifiability of general phenomena and logical consistency may eventually be socially useful.

In this view, all inquiry is seen as directed toward the assessment of three properties of statements about phenomena: 1) their empirical verifiability by accepted methods; 2) their logical consistency with other accepted or known facts; 3) their social utility. Most disciplined inquiry aims to assess each property in varying degrees. The definition of "theory" in Webster's Third New International Dictionary (definition 3.a (2)) is tripartite: "The coherent set of hypothetical, conceptual and pragmatic principles forming the general frame of reference for a field of inquiry (as for deducing principles, formulating hypotheses for testing, undertaking actions)." The three inquiry activities in Webster's definition correspond closely to the three inquiry properties proposed here.

7. "Universality" of the Phenomena Studied

Perhaps the highest correlate of the elucidatory-evaluative distinction is the "universality" of the phenomena studied. The term universality is a bit grand, but it conveys a meaning that might have been distorted by many more modest labels that suggest themselves. Elucidatory inquirers work with constructs having a currency and scope of application which make the objects one evaluates seem parochial by comparison. A psychologist experiments with "reinforcement" or "need achievement" which are regarded as neither specific to geography nor to one point in time. The effect of positive reinforcement following upon the responses that are observed is assumed to be a phenomenon shared by most persons in most times; moreover, the number of specific instances of human behavior which are examples of the working of positive reinforcement is great. Not so with the phenomena which educationists evaluate. A particular textbook, an organizational plan, and a film strip have a short life expectancy and may not be widely shared. However, whenever their cost or potential pay-off rises above a negligible level, they are of interest to the evaluator.

Three aspects of the "universality" of a phenomenon can be identified: 1) generality across time (Will the phenomenon -- a textbook, "self-concept," etc. -- be of interest fifty years hence?); 2) generality across geography (Is the phenomenon of any interest to people in the next town, the next state, across the ocean?); 3) applicability to a number of specific instances of the general phenomenon (Are there many specific examples of the phenomenon being studied or is this the "one and only"?).

8. Salience of the Value Question

At least in theory, a value can be placed on the outcome of any inquiry, and all inquiry is directed toward the discovery of something worthwhile and useful. In evaluation, it is usually quite clear that some question of value is being addressed. Indeed, value questions are the sine qua non of evaluative inquiry and usually determine what information is sought. This is not to say that value questions are not germane in elucidatory inquiry; they are just less obvious. The acquisition of knowledge of auto-mechanics or the inculcation of "good citizenship" are clearly value-laden endeavors. The value questions in the derivation of a new oblique transformation technique in factor analysis or the investigation of the transfer of information from short-term to long-term memory are not so obvious, but they are there nonetheless. With respect to assessing the value of things, the difference between elucidatory and evaluative inquiry is one of degree, not of kind.

9. Investigative Techniques

A substantial amount of opinion has been expressed recently to the effect that elucidatory and evaluative inquiry should employ different techniques for gathering and processing data, that the methods appropriate to elucidatory inquiry -- such as comparative experimental design -- are not appropriate to evaluation, or that with respect to techniques of empirical inquiry, evaluation is a thing apart. In fact, however, there are many more similarities than differences between elucidatory and evaluative inquiry with regard to the techniques by which empirical evidence is collated and judged to be sound. As Stake and Denny (1969, p. 374) indicated: "The distinction between research and evaluation can be overstated as well as understated. Researchers and evaluators work within the same inquiry paradigm .... [training programs for] both must include skill development in general educational research methodology."

Hemphill (1969, p. 220) expressed the same opinion when he wrote: "The consequence of the differences between the proper function of evaluation studies and research studies is not to be found in differences in the subject of interest or in the methods of inquiry of the researcher and of the evaluator."

RELATIONSHIP BETWEEN ELUCIDATORY AND EVALUATIVE INQUIRY

Evaluation borrows inquiry techniques and knowledge for recognizing value from basic sciences and contributes little in return. Methodological research in the social sciences produces the technologies of data collection and analysis that are so important to empirical educational evaluation. In addition, knowledge produced by basic elucidatory inquiry is often critical in determining whether a particular finding from an evaluative study counts as good or bad. For example, the evaluative meaning given to the finding that a health curriculum decreased the incidence of cigarette smoking among teenagers is dependent upon the extensive medical research which established a causal link between cigarette smoking and cancer and coronary disease.

The view of the relationship between elucidatory and evaluative inquiry presented here is parasitic; some see the two living symbiotically:

To some extent evaluative research may offer a bridge between 'pure' and 'applied' research. Evaluation may be viewed as a field test of the validity of cause-effect hypotheses in basic science whether these be in the field of biology (i.e., medicine) or sociology (i.e., social work). Action programs in any professional field should be based upon the best available scientific knowledge and theory of that field. As such, evaluations of the success or failure of these programs are intimately tied to the proof or disproof of such knowledge. Since such a knowledge base is the foundation of any action program, the evaluation research worker who approaches the task in the spirit of testing some theoretical proposition rather than a set of administrative practices will in the long run make the most significant contribution to the program development. (Suchman, 1967, p. 1970)

Suchman saw "action programs" as based on scientific knowledge and theory; I see a more tenuous link between (a) what can be conceived of abstractly and established empirically in the laboratory and (b) what can be implemented in the field. There can hardly be said to exist an "intimate" connection between the success of a program in the field and some theory or hypotheses from a basic discipline. The tribal shaman may be effective for all of the wrong reasons, and a prototype turbine automobile may fail even though it is consistent in all particulars with physical theory.

One need not be able to explain phenomena to evaluate them. We can know how well or how poorly without knowing why. Understanding or elucidation is required neither in summative nor formative evaluation. We can perfectly well make summative judgments -- and we frequently do -- without understanding why one of the alternatives being evaluated scored highest on some weighted scale of value. In formative evaluation, observations of the quality of performance are made for the purpose of improving the thing that we are developing. It may seem that one can't very well make an improvement in the system unless one knows why performance was unacceptable. Otherwise, responses to data on substandard performance would be random stabs in the dark with little chance of success. If test scores indicate the prevalence of ignorance among tenth graders after studying a program unit on the relationship between birth rates, death rates, and population growth, the curriculum developers will act quite differently if they suspect the failure is due to the lack of practice in working problems rather than the complexity of the mathematics used to explain the relationship. Surely then, formative evaluation requires some greater knowledge of why performance is acceptable or unacceptable than does summative evaluation. But this greater knowledge is knowledge of particulars, not codified general knowledge in the form of an abstract system of laws. We know why many things are as they are, even why some things are good and others bad; and all of this knowledge is of particulars; it is of no general significance. My car continually loses front-end alignment because of the accident that sprung the frame; my electric razor works poorly because I dropped it on the bathroom floor. Both statements are explanations, but that doesn't make them of general interest. We pursue both formative and summative evaluation without the need to explain outcomes by reference to a system of general laws or relationships among variables.

PRETENSIONS TO SCIENTIFIC EDUCATION

The conclusion forces itself upon one that elucidatory inquiry on education over the past eighty years (from Joseph M. Rice to the greenest Ph.D. just now taking final orals) has been a failure for the most part. The areas that I would regard as distinctly educational research and not highly dependent on research in one of the behavioral or social sciences include the following: teacher behavior, teacher competence, classroom interaction, guidance theory, counseling, tests and measurement, media of instruction (e.g., television and movies), the organization of teaching (e.g., team teaching, cross-age teaching, and differentiated staffing), class size research, and many of the prominent areas of Ralph Tyler's (1967-68, p. 44) map of science education (the outcomes of science education, student development -- perhaps as opposed to human development in general -- the development of teachers, the objective of education for teachers, the teaching-learning process of teacher education, and the process of change in education, etc.).